Steve Etter

Lifetime Supporting Member + Moderator

I'm trying to get a better understanding of EtherNet/IP networking and how IGMP snooping works with multicast communications. Primarily, I'm trying to understand how to tell which devices will see a given multicast message and which will not.

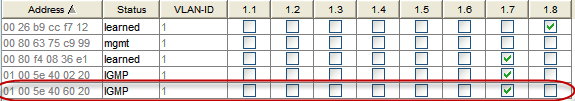

As I understand it, in a general sense, a managed switch that uses IGMP snooping controls where multicast messages are sent by first querying the devices connected to it and building a table of which devices want to receive them. If a device is on the list, it receives the messages; if not, it doesn't.
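A minimal sketch of the table described above, in Python. It assumes the simplest possible snooping behavior: for each multicast group address, the switch records the ports on which it saw an IGMP membership report, and it forwards a multicast packet only to those ports. The class and method names are hypothetical; real switches also handle querier/router ports, aging timers and IGMP versions.

```python
from collections import defaultdict


class SnoopingTable:
    """Toy model of the table an IGMP-snooping switch builds (hypothetical names).

    Only the group -> ports mapping is modeled; querier/router ports,
    aging timers and IGMP version handling are left out.
    """

    def __init__(self):
        # multicast group address -> ports on which a membership report was seen
        self.groups = defaultdict(set)

    def on_report(self, port, group):
        # A host behind `port` answered a query (or sent an unsolicited join) for `group`
        self.groups[group].add(port)

    def on_leave(self, port, group):
        # A host sent a Leave, or its entry aged out after it stopped answering queries
        self.groups[group].discard(port)

    def egress_ports(self, in_port, group):
        # Forward only to ports that joined this group, never back out the ingress port.
        # Unknown groups return [] here; many real switches flood them instead.
        return sorted(self.groups.get(group, set()) - {in_port})


table = SnoopingTable()
table.on_report(2, "239.192.1.10")
table.on_report(3, "239.192.1.10")
print(table.egress_ports(1, "239.192.1.10"))  # → [2, 3]
print(table.egress_ports(1, "239.192.1.11"))  # → []
```

The group address 239.192.1.10 is only an example. Note that the "list" is keyed on the group address, not on the device or its subnet.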

OK, so assuming I'm not too far off base here, how does a device know it wants to be on a specific list? What configuration determines that? Is it the subnet mask or something else?
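For reference, IGMP itself has no notion of a subnet mask: an application on the device asks its IP stack to join a specific multicast group address (somewhere in 224.0.0.0/4), and the stack then sends an IGMP Membership Report naming that group. The sketch below assumes IGMPv2 (RFC 2236) and builds that 8-byte report by hand, to show that the group address is the only field identifying the "list". `join_group` uses the standard `IP_ADD_MEMBERSHIP` socket option, which makes the OS send the report itself. Hosts running IGMPv3 send a differently formatted report (type 0x22), but it also carries the group address. The helper names and the address 239.192.1.10 are illustrative.

```python
import ipaddress
import socket
import struct

IGMPV2_MEMBERSHIP_REPORT = 0x16  # IGMPv2 message type (RFC 2236)


def igmp_checksum(data: bytes) -> int:
    # 16-bit one's complement of the one's-complement sum (same algorithm as the IP checksum)
    if len(data) % 2:
        data += b"\x00"
    total = 0
    for (word,) in struct.iter_unpack("!H", data):
        total += word
        total = (total & 0xFFFF) + (total >> 16)  # fold the carry back in
    return ~total & 0xFFFF


def igmpv2_report(group: str) -> bytes:
    addr = ipaddress.IPv4Address(group)
    if not addr.is_multicast:
        raise ValueError(f"{group} is not in 224.0.0.0/4")
    # Type, Max Resp Time (0 in reports), Checksum (0 while computing), Group Address
    body = struct.pack("!BBH4s", IGMPV2_MEMBERSHIP_REPORT, 0, 0, addr.packed)
    return struct.pack("!BBH4s", IGMPV2_MEMBERSHIP_REPORT, 0, igmp_checksum(body), addr.packed)


def join_group(group: str, iface_ip: str = "0.0.0.0") -> socket.socket:
    # Ask the OS to join `group`; the OS emits the IGMP report on the wire.
    # Bind the socket to the application's UDP port before reading data from it.
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    mreq = struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(iface_ip))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, mreq)
    return sock


print(igmpv2_report("239.192.1.10").hex())  # → 1600f934efc0010a
```

The last four bytes (`efc0010a`) are the group address. That value is what a snooping switch reads to decide which port belongs on which list.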

So, if I have two PLC processors connected to a common network with the same subnet mask, but each behind its own IGMP-snooping-enabled managed switch, how can I be sure that multicast messages from the remote I/O belonging to one processor are not being seen by the other? How do the switches distinguish between the two?
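A toy simulation of the two-switch case. It rests on several assumptions, all labeled here and in the code. Each remote I/O adapter produces to its own multicast group address, and the two addresses are made up. Each processor joins only the group it consumes. Membership reports are relayed across the inter-switch link, whereas real switches relay them toward the querier/router port and also forward multicast out that port. Under these assumptions, the switches tell the two streams apart purely by group address. Neither the subnet mask nor which switch a device sits behind plays a part. The `Switch`, `join` and `deliver` names and the port names are hypothetical.

```python
from collections import defaultdict


class Switch:
    """Toy IGMP-snooping switch (hypothetical model, not a vendor implementation)."""

    def __init__(self, name):
        self.name = name
        self.members = defaultdict(set)  # group address -> ports that reported a listener
        self.links = {}                  # local port -> (neighbor switch, neighbor port)

    def connect(self, port, other, other_port):
        self.links[port] = (other, other_port)
        other.links[other_port] = (self, port)


def join(switch, port, group):
    """A listener on `port` reports membership in `group`."""
    switch.members[group].add(port)
    # Simplification: relay the report over every inter-switch link so the neighbor
    # learns the listener is reachable through that link (assumes a loop-free tree).
    for uplink, (neighbor, neighbor_port) in switch.links.items():
        if uplink != port:
            join(neighbor, neighbor_port, group)


def deliver(switch, in_port, group, reached=None):
    """Return the end-device ports that receive a multicast packet for `group`."""
    reached = [] if reached is None else reached
    for port in sorted(switch.members.get(group, set()) - {in_port}):
        if port in switch.links:  # member port is an uplink: follow it to the next switch
            neighbor, neighbor_port = switch.links[port]
            deliver(neighbor, neighbor_port, group, reached)
        else:
            reached.append(f"{switch.name}:{port}")
    return reached


GROUP_A = "239.192.1.10"  # hypothetical group produced by remote I/O A
GROUP_B = "239.192.1.20"  # hypothetical group produced by remote I/O B

sw1, sw2 = Switch("SW1"), Switch("SW2")
sw1.connect("uplink", sw2, "uplink")
join(sw1, "plcA", GROUP_A)  # processor A consumes I/O A's data
join(sw2, "plcB", GROUP_B)  # processor B consumes I/O B's data

print(deliver(sw1, "ioA", GROUP_A))  # I/O A on SW1 → ['SW1:plcA']
print(deliver(sw2, "ioA", GROUP_A))  # I/O A moved to SW2 → ['SW1:plcA']
print(deliver(sw2, "ioB", GROUP_B))  # → ['SW2:plcB']
```

Moving I/O A from SW1 to SW2 changes the path, because the packet now crosses the uplink, but it does not change the result: only processor A's port receives the data. Processor B would see it only if something on its port joined `GROUP_A`.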

I've read what I can find on the web, but nothing I've found explains what is actually used to make this distinction.

I hope this makes sense.
