Good afternoon everyone,

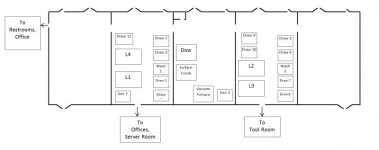

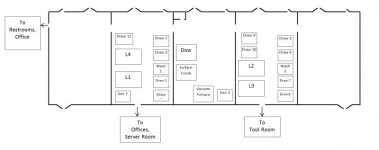

I am thinking about a new physical network layout for our plant. I have attached a diagram of our floor plan. The five bays are 40'x60' each. Current network is as follows:

Everything labeled "Draw #" and "Gen #" is currently on a common RS-485 serial link that runs to our current SCADA server. Everything labeled "L#", "Dow", and "Surface Comb" has its own serial link to the SCADA server. The Vacuum Furnace has an HMI, PLC, and unmanaged switch inside its control cabinet. We also have an Ethernet link (on a dedicated card) directly from the SCADA server to the vacuum furnace. A separate Ethernet card connects the SCADA server to the office network.

Each piece of equipment is a standalone part of our operation. None of the pieces talk to each other or need to share data.

We also have WiFi access points at the entrances to the Server Room and Tool Room. These do a fair job of covering the whole building, except for the office area near the bathrooms. The WiFi access points are connected to the office network.

With the backstory out of the way, I am considering installing new infrastructure because we are adding a new piece of equipment in the far right bay, and it has made me start comparing the current state of affairs to where we want to be. The new piece will have a PLC and HMI connected via Ethernet, similar to the vacuum furnace. I would like to connect them to the SCADA system over Ethernet as well, but we don't currently have any network backbone in that area. Also, down the road, I will likely be upgrading the controls on the existing equipment, and those links will probably be best on Ethernet too. Finally, at some point I would like to upgrade the SCADA server itself. Currently, it is just a Dell desktop running old SCADA software that doesn't do everything I would like it to. I would like to eventually transition to Ignition. The office network is served by a decently specced Dell server running VMware ESXi. It has two independent network cards and, I think, is expandable enough to cover the hardware needs of running Ignition. Other thoughts for the future include a VoIP phone system and IP-based security cameras.

What I am considering is installing an enclosure in each bay with a managed switch inside, and running an Ethernet line from each of them back to another managed switch in the server room. (Another possibility is a trunk link that daisy-chains the switches together, possibly with a link to the server room at both ends for redundancy.) Then any piece of equipment that needs to be added to the SCADA Ethernet network can connect to the box in that bay. If we need additional workstations for SCADA access, VoIP phones, cameras, or whatever, we can connect them to the box in the bay as well, using VLANs to separate traffic as needed.
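
To make the VLAN idea a bit more concrete, here is a rough Python sketch of the kind of plan I have in mind. The VLAN IDs, names, and 10.10.x.0/24 subnets are placeholders I made up for illustration, not anything configured today. The idea is one subnet per VLAN, plus a quick sanity check that IDs are unique, fall in the usable 802.1Q range (1-4094), and that no two subnets overlap before anything gets programmed into the switches.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Vlan:
    vid: int                       # 802.1Q VLAN ID
    name: str                      # what the VLAN carries
    subnet: ipaddress.IPv4Network  # one IP subnet per VLAN


# Hypothetical plan: IDs, names, and subnets are placeholders, not real addressing.
PLAN: List[Vlan] = [
    Vlan(10, "office", ipaddress.ip_network("10.10.10.0/24")),
    Vlan(20, "scada", ipaddress.ip_network("10.10.20.0/24")),
    Vlan(30, "voip", ipaddress.ip_network("10.10.30.0/24")),
    Vlan(40, "cameras", ipaddress.ip_network("10.10.40.0/24")),
]


def validate(plan: List[Vlan]) -> None:
    """Raise ValueError if IDs repeat, fall outside 1-4094, or subnets overlap."""
    ids = [v.vid for v in plan]
    if len(ids) != len(set(ids)):
        raise ValueError("duplicate VLAN ID")
    for v in plan:
        if not 1 <= v.vid <= 4094:
            raise ValueError(f"VLAN {v.vid} is outside the usable 802.1Q range")
    for i, a in enumerate(plan):
        for b in plan[i + 1:]:
            if a.subnet.overlaps(b.subnet):
                raise ValueError(f"{a.name} and {b.name} subnets overlap")


def vlan_for(ip: str, plan: List[Vlan] = PLAN) -> Optional[Vlan]:
    """Return the VLAN whose subnet contains ip, or None if no VLAN matches."""
    addr = ipaddress.ip_address(ip)
    for v in plan:
        if addr in v.subnet:
            return v
    return None


validate(PLAN)
print(vlan_for("10.10.20.15").name)  # scada
```

Each bay switch would then carry the same set of VLANs on its uplink, and each port would just be assigned to whichever VLAN the device plugged into it belongs on.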

I think this should be a decent layout. I'm still a bit fuzzy on where routers would be necessary, and whether each machine should have a managed switch to handle internal PLC-HMI traffic.
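
Here is my current understanding of the router question, written out as a small sketch so someone can correct me if it's wrong: a device only hands traffic to a router (or a layer-3 switch or firewall) when the destination is outside its own subnet. Anything on the same subnet, like a PLC and HMI in one cabinet, is just switched. The function name and all addresses below are made up for illustration.

```python
import ipaddress
from typing import Optional


def next_hop(src_interface: str, dst_ip: str, gateway: Optional[str]) -> str:
    """Return where a host sends a packet: straight to the destination if it is
    on the host's own subnet, otherwise to its default gateway (a router)."""
    iface = ipaddress.ip_interface(src_interface)  # host address plus prefix length
    dst = ipaddress.ip_address(dst_ip)
    if dst in iface.network:
        # Same subnet: delivered at layer 2 by the switch, no router involved.
        return str(dst)
    if gateway is None:
        raise ValueError(f"{dst} is off-subnet and no gateway is configured")
    # Different subnet: a layer-3 device must forward it.
    return gateway


# Hypothetical addresses, for illustration only.
print(next_hop("192.168.1.20/24", "192.168.1.10", None))        # HMI -> PLC in same cabinet: 192.168.1.10
print(next_hop("10.10.20.5/24", "192.168.1.10", "10.10.20.1"))  # SCADA server -> cabinet PLC: 10.10.20.1
```

If I have that right, the PLC-HMI traffic inside a cabinet never needs a router, and routing only comes into play where separate subnets (SCADA, office, etc.) have to reach each other.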

If it makes a difference, we are mostly an Automation Direct shop. The older controls that we will be replacing are Honeywell and Eurotherm stuff. Everything right now talks Modbus/RTU (or Modbus/TCP in the case of the vacuum furnace).
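
For anyone less familiar with the protocol side, here is a sketch (my own helper functions, not any particular library's API) of how the same "read holding registers" request is framed on our serial links today versus over Ethernet. Modbus/RTU is unit address + PDU + CRC-16, while Modbus/TCP drops the CRC and prepends a 7-byte MBAP header. The unit ID, start address, and register count are arbitrary example values.

```python
import struct


def crc16_modbus(data: bytes) -> int:
    """CRC-16/MODBUS: init 0xFFFF, reflected polynomial 0xA001, no final XOR."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc


def read_holding_pdu(start: int, count: int) -> bytes:
    """PDU for function 0x03: function code, start address, register count (big-endian)."""
    return struct.pack(">BHH", 0x03, start, count)


def rtu_frame(unit: int, pdu: bytes) -> bytes:
    """Modbus/RTU: unit address + PDU + CRC, with the CRC sent low byte first."""
    body = bytes([unit]) + pdu
    return body + struct.pack("<H", crc16_modbus(body))


def tcp_frame(transaction_id: int, unit: int, pdu: bytes) -> bytes:
    """Modbus/TCP: MBAP header (transaction ID, protocol ID 0, length, unit ID) + PDU, no CRC."""
    length = len(pdu) + 1  # length field counts the unit ID plus the PDU
    return struct.pack(">HHHB", transaction_id, 0, length, unit) + pdu


pdu = read_holding_pdu(start=0, count=10)  # example: 10 registers from address 0
print(rtu_frame(1, pdu).hex(" "))
print(tcp_frame(1, 1, pdu).hex(" "))
```

The PDU is identical in both cases, which is part of why moving the existing equipment from RTU to TCP as the controls get upgraded seems reasonable to me.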

Questions and comments are greatly appreciated. I'll take brand recommendations into consideration, but as with any small business, I also need to keep cost in mind.

Thanks,

Brian