Ken Roach

Lifetime Supporting Member + Moderator

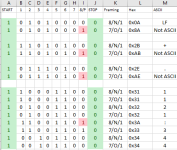

In general, a single data byte in RS-232 consists of:

Start Bit

Data Bits

Parity Bit

Stop Bits

The settings that apparently work between the PC and MGI are one Start bit, seven Data bits, one Odd Parity bit, and two Stop bits. "7/O/2" adds up to an 11-bit frame.

The settings that apparently make data visible in Tera Term are one Start bit, seven Data bits, no Parity bit, and two Stop bits. "7/N/2" adds up to a 10-bit frame.
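To make the bit counting explicit, here is a minimal Python sketch of the arithmetic. The function name `frame_bits` and the "data/parity/stop" string format are just illustrative choices, not anything from the 5069-SERIAL or Tera Term, and it assumes a whole number of stop bits.

```python
def frame_bits(setting: str) -> int:
    """Total bits on the wire per character for a 'data/parity/stop' string like '7/O/2'."""
    data, parity, stop = setting.upper().split("/")
    parity_bits = 0 if parity == "N" else 1  # any parity mode other than None adds one bit
    return 1 + int(data) + parity_bits + int(stop)  # the leading 1 is the Start bit


for setting in ("7/O/2", "7/N/2", "8/N/1"):
    print(setting, frame_bits(setting))
```

This gives 11 for "7/O/2" and 10 for both "7/N/2" and "8/N/1".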

But from what I'm reading, the 5069-SERIAL gives framing errors when set for 7/N/2 and connected to the "sniffer" wiring.

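There could be two sources for those framing errors: an incorrect Parity bit, or fewer than two Stop bits. If the device is really sending 7/O/2 and the module is listening for 7/N/2, the Parity bit lands where the module expects its first Stop bit, and whenever that bit is a 0 it can look like a missing Stop bit. The module has a specific parity error indication in the status tags, "Ix.ASCII.ParityError", and another for other framing errors, "Ix.ASCII.FramingError".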
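Here is a rough Python simulation of that mismatch, assuming the sender really is transmitting 7/O/2 and the receiver is set for 7/N/2 and checks both Stop bit positions. That second assumption is mine: plenty of UARTs only check the first Stop bit, and I don't know which the 5069-SERIAL does. The helper names `encode` and `framing_error_as_7n2` are made up for the illustration.

```python
def encode(byte: int, data_bits: int = 7, parity: str = "O", stop_bits: int = 2) -> list[int]:
    """Build one serial frame as a list of line levels (Start = 0, Stop = 1, data LSB first)."""
    data = [(byte >> i) & 1 for i in range(data_bits)]
    frame = [0] + data                       # Start bit, then data bits
    if parity == "O":
        frame.append(1 - sum(data) % 2)      # odd parity: data + parity has an odd count of 1s
    elif parity == "E":
        frame.append(sum(data) % 2)          # even parity: data + parity has an even count of 1s
    return frame + [1] * stop_bits           # Stop bits


def framing_error_as_7n2(frame: list[int]) -> bool:
    """Read an 11-bit frame as 7/N/2: positions 8 and 9 are where the Stop bits should be."""
    return any(bit == 0 for bit in frame[8:10])


for ch in "AC":
    print(ch, framing_error_as_7n2(encode(ord(ch))))
```

"A" (0x41) has an even number of 1 bits, so its odd Parity bit is a 1 and passes for a Stop bit. "C" (0x43) has an odd number, so its Parity bit is a 0 and the receiver sees a framing error. In this model the error only shows up on some characters, not all of them.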

Some devices just don't support 11-bit serial framing. The most common serial framing is "8/N/1", which is 10-bit.

But most devices do. A typical FTDI serial/USB chipset can use either frame length. The 5069-SERIAL user manual says that it supports both. Some virtual serial ports, though, don't support parity bits.

I personally prefer RealTerm to Tera Term. Despite its kludgy interface, it works well at a very low level with the handshaking and framing.

If this were my system, I probably would have broken out the CleverScope, which can be set to decode RS-232 frames inline. Pretty cool.

At least make sure when you change the framing settings on the 5069-SERIAL that you give it a full reset, maybe even a reboot. It *should* handle framing changes with grace, but you never know.
