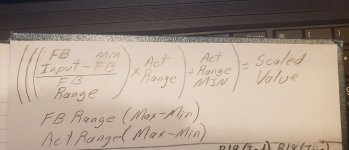

Jsu 0234M, your formula is quite straightforward. Does it work for all analog quantities, like pressure, weight, temperature, strain, etc.?

What is the margin of error, if any?

This is an interesting question.

The margin of error is wholly dependent on how linear the [transducer+A/D] system is in converting the physical phenomenon into raw counts, so there is no single answer. For many measurements (pressure, weight, strain), it is "close enough," or if not, it may be close enough over a limited range (e.g. thermocouples, but they are usually handled differently because of the known characteristics of the various metals involved).
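To make "linear" concrete: the formula being discussed is presumably the usual two-point (straight-line) scaling, where a raw count is converted by proportion, (raw - raw_lo) / (raw_hi - raw_lo) = (EU - EU_lo) / (EU_hi - EU_lo), with EU meaning engineering units. The Python sketch below assumes that form (the version posted in the thread may be written differently). It feeds the formula from a made-up transducer that has a small 2% quadratic "bow", to show how nonlinearity becomes error: the reading is exact at the two end points and worst in the middle of the span. The names `scale_linear` and `hypothetical_counts`, the 12-bit A/D range, and the 0-100 psi span are all illustrative assumptions.

```python
def scale_linear(raw, raw_lo, raw_hi, eu_lo, eu_hi):
    """Two-point linear scaling: map raw counts to engineering units (EU) by proportion."""
    if raw_hi == raw_lo:
        raise ValueError("raw_lo and raw_hi must differ")
    fraction = (raw - raw_lo) / (raw_hi - raw_lo)  # position within the raw span, 0.0 to 1.0
    return eu_lo + fraction * (eu_hi - eu_lo)


def hypothetical_counts(pressure_psi):
    """Made-up 0-100 psi transducer on a 12-bit A/D, with a 2% quadratic 'bow'.

    The bow is zero at both ends of the span and largest at mid-span.
    A/D quantization (rounding to whole counts) is ignored for clarity.
    """
    x = pressure_psi / 100.0  # fraction of the 0-100 psi span
    return 4095 * (x + 0.02 * x * (1 - x))


# Scale using only the end points (0 and 4095 counts), then check the whole span.
SPAN_PSI = 100.0
worst = 0.0
for p in range(0, 101, 10):
    reading = scale_linear(hypothetical_counts(p), 0, 4095, 0.0, SPAN_PSI)
    worst = max(worst, abs(reading - p))

print(f"worst-case error: {worst:.2f} psi = {100 * worst / SPAN_PSI:.2f}% of full scale")
```

With this particular made-up bow, the worst error lands at mid-span (50 psi) and comes to about 0.5% of full scale. The same formula works on a 4-20 mA signal, e.g. `scale_linear(12.0, 4.0, 20.0, 0.0, 100.0)` gives 50.0; how well it works always depends on how straight the real sensor's curve is.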

Most measurement vendors quote an accuracy in their literature, but to definitively answer that question, you need to do a proper calibration on every system at several points over your range of interest.
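For the calibration step, one common approach (not necessarily what any particular vendor does) is to apply several known reference inputs across the range, record the raw counts at each, fit a best-fit straight line by least squares, and take the largest deviation from that line as the error at those points. The Python sketch below assumes that approach; `fit_line`, `calibration_error`, and the calibration numbers are hypothetical. It only tells you the error at the points you actually tested, and it also folds in any error in the reference standards themselves.

```python
def fit_line(raw, ref):
    """Ordinary least-squares fit of ref = slope * raw + offset."""
    n = len(raw)
    if n < 2 or n != len(ref):
        raise ValueError("need at least two paired calibration points")
    mean_r = sum(raw) / n
    mean_e = sum(ref) / n
    sxx = sum((r - mean_r) ** 2 for r in raw)
    if sxx == 0:
        raise ValueError("raw values must not all be identical")
    sxy = sum((r - mean_r) * (e - mean_e) for r, e in zip(raw, ref))
    slope = sxy / sxx
    return slope, mean_e - slope * mean_r


def calibration_error(raw, ref, span):
    """Fit a line, then return (slope, offset, worst residual as % of span)."""
    slope, offset = fit_line(raw, ref)
    worst = max(abs(slope * r + offset - e) for r, e in zip(raw, ref))
    return slope, offset, 100.0 * worst / span


# Hypothetical 5-point check of a 0-100 kg scale: known reference weights
# versus the raw counts read at each one (made-up numbers, not real data).
ref_kg = [0.0, 25.0, 50.0, 75.0, 100.0]
raw_counts = [12, 1030, 2051, 3069, 4080]
slope, offset, err_pct = calibration_error(raw_counts, ref_kg, span=100.0)
print(f"kg = {slope:.6f} * counts + {offset:.4f}; worst residual {err_pct:.3f}% of span")
```

Using the fitted slope and offset in place of nominal end-point values is what turns the generic formula into a calibrated one. If the worst residual is too large for the application, a straight line is not good enough over that range, and a piecewise or polynomial correction (as is commonly done for thermocouples using published reference polynomials) may be needed.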

Now all of that is indeed interesting, but my real question is, why would someone who seems to have responsibility for a measurement system be asking this question in the first place? Did they even understand what I meant by "linear" above?

I am not trying to demean the OP or their educational background, but one of the fundamental concepts of engineering is proportions, and anyone who understands proportions would not be asking the original question. And although you will get an answer here (the many versions of the formula posted in response), I am concerned about the difference between having a formula and understanding a formula.

With that in view, would the OP mind providing the context for their question?